The words figure and fictitious both derive from the same Latin root, fingere (to form, create). Beware!

--M.J. Moroney

The words figure and fictitious both derive from the same Latin root, fingere (to form, create). Beware!

--M.J. MoroneyAs engineers, you need to sensibly select technologies. Some exciting candidate technologies are too bleeding edge, or too slow or cumbersome to use in practice. And you have to decide which is which.

The next two lectures offer examples of this process. Note that in both case, the gaming industry uses technology (b) while theoreticians prefer (a).

In your career you'll have to strike your own balance between the limitations of the simpler method (b) vs the extra functionality offered by the more complex method (a).

The words figure and fictitious both derive from the same Latin root, fingere (to form, create). Beware!

--M.J. Moroney

The words figure and fictitious both derive from the same Latin root, fingere (to form, create). Beware!

--M.J. Moroney

Everything is vague to a degree you do not realize :till you have tried to make it precise. --Bertrand Russell

I'm pretty certain that I need better ways to handle uncertainty. Many expressions of human knowledge are not crisp ideas. Rather, they are hedges, shades of gray.

Consider four problems in programming a game:

The problem is that conventional principles of logic are very unfriendly towards uncertainty. In classical logic, things are either true or false. You do, you don't. Worse, if a model contains a single inconsistency, the whole theory is false. So one little doubt, one little "this or not this" and you are screwed. Not very helpful for introducing gradual degrees of hedged knowledge that may be contradictory.

.jpg/200px-Lotfi_A._Zadeh(2004).jpg) This is not just a game playing problem.

The drawbacks of classical logic are well known.

Theoreticians like Lofti Zadeh offer a Principle of Incompatibility :

This is not just a game playing problem.

The drawbacks of classical logic are well known.

Theoreticians like Lofti Zadeh offer a Principle of Incompatibility :

Philosophers like Karl Popper claim that accessing 100% evidence on any topic a fool's goal. All "proofs" must terminate on premises; i.e. some proposition that we accept as

true without testing, but which may indeed be wrong.

In terms of most human knowledge, a recursion to base premises is

fundamentally impractical. For example:

Philosophers like Karl Popper claim that accessing 100% evidence on any topic a fool's goal. All "proofs" must terminate on premises; i.e. some proposition that we accept as

true without testing, but which may indeed be wrong.

In terms of most human knowledge, a recursion to base premises is

fundamentally impractical. For example:

There is much evidence for Popper's thesis. Gathering all the information required to remove all uncertainties can be very difficult:

The (in)famous "Limits to Growth"

study attempted to predict the international effects of continued global economic growth. Less than 0.1% of the data required for the models was available

The (in)famous "Limits to Growth"

study attempted to predict the international effects of continued global economic growth. Less than 0.1% of the data required for the models was available

Experience with mathematical simulation suggest that an over-enthusiasm for quantitative analysis can confuse, rather than clarify, a domain. For systems whose parts are not listed in a catalog, which evolve together, which are difficult to measure, and which show unexpected capacity to form new connections, the results of (quantitative) simulation techniques have been less than impressive:

Anyway, why seek total knowledge? Some levels of uncertainty are a good thing:

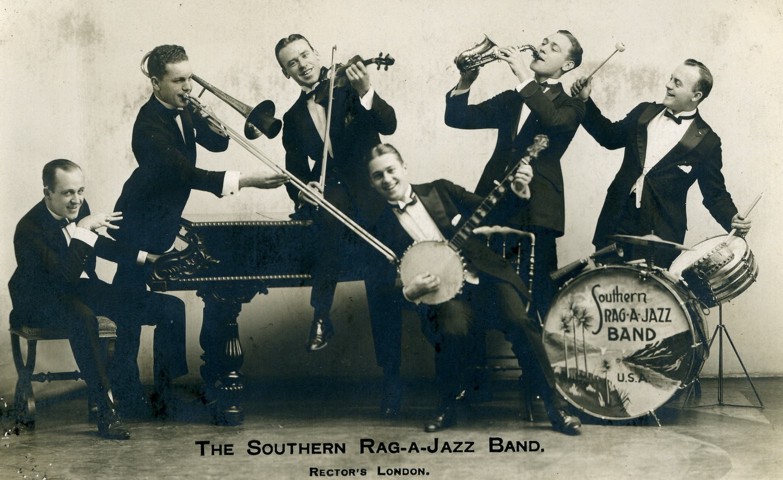

When co-ordinating multiple agents, it is good to leave some room for

local improvisation. Consider the phrase "close enough for jazz":

When co-ordinating multiple agents, it is good to leave some room for

local improvisation. Consider the phrase "close enough for jazz":

Sometimes we can learn more by allowing some uncertainties. Creative solutions and insights can sometimes be found in a loosely constrained space than may be impossible in tightly constrained, certain spaces. For example,

PROXIMITY

SIZE close medium far

-------- ----------------------

tiny medium low low

small high low low

moderate high medium low

large high high medium

So we need to change classical logic. Uncertainty is unavoidable. Despite this, we persist and continue building systems of increasing size and internal complexity. Somehow, despite uncertainty, we can handle uncertainty and doubt and predict the future a useful percent of the time. How do we do it? How can we teach AI algorithms to do it?

Officially, there are rules. Unofficially, there are shades of gray. Fuzzy logic is one way to

handle such shades of gray.

Officially, there are rules. Unofficially, there are shades of gray. Fuzzy logic is one way to

handle such shades of gray.

For example, consider the rule ``if not raining then play golf''. Strange to say, sometimes people play golf even in the rain. Sure, they may not play in a hurricane but a few drops of rain rarely stops the dedicated golfer.

Fuzzy logic tries to capture these shades of gray. Our golf rule means that we rush to play golf in the sunshine, stay home during hurricanes, and in between we may or may not play golf depending on how hard it is raining.

Fuzzy logic uses fuzzy sets. Crisp sets have sharp distinctions between true and falsehood (e.g. it is either raining or not). Fuzzy sets have blurred boundaries (e.g. it is barely raining). Typically, a membership function is used to denote our belief in some concept. For example, here are some membership functions for the belief that a number is positive. All these beliefs have the same membership function:

1/(1+exp(-1*x*crisp))

Or, in another form...

(defun crisp (x a b crisp)

"Return a point in a sigmoid function centered at (a - b)/2"

(labels ((as100 (x min max)

(+ -50 (* 100 (/ (- x min) (- max min))))))

(/ 1 (+ 1 (exp (* -1 (as100 x a b) crisp))))))

(defun fuzzy-grade (x &optional (a 0) (b 1) (slope 0.1))

(crisp x a b slope))

where the crisp parameter controls how sharp is the boundary in our beliefs:

Note that for large crisp values the boundary looks like classical logic: at zero a number

zaps from positive to negative.

However, in the case of our golfing rule, there are

shades of gray in between sunshine and hurricanes.

We might believe in playing golf, even when

there is a little rain around.

For these situations, our beliefs are hardly crisp, as modeled by setting

crisp to some value less than (say) one.

The crisp value lets us operationalize a set of linguistic variables or hedges such as ``barely'', ``very'', ``more or less'', ``somewhat,'' ``rather,'' ``sort of,'' and so on. In this approach, analysts debrief their local experts on the relative strengths of these hedges then add hedge qualifiers to every rule. Internally, this just means mapping hedges to crisp values. For example:

definitely: crisp=10 sort of: crisp=0.5 etc

Alternatively, analysts can ask the local experts to draw membership functions showing how the degrees of belief in some concept changes over its range. For example:

Sometimes, these can be mapped to mathematical functions as in the following:

(defun fuzzy-triangle (x a b c) (max 0 (min (/ (- x a) (- b a)) (/ (- c x) (- c b))))) (defun fuzzy-trapezoid (x a b c d) (max 0 (min (/ (- x a) (- b a)) 1 (/ (- d x) (- d c)))))

Note that the function need not be represented mathematically. If the local experts draw any shape at all, we could read off values from that drawing and store them in an array. For example, an analyst can construct an array of values for various terms, either as vectors or matrices. Each term and hedge can be represented as (say) a 7-element vector or 7x7 matrix. Each element of every vector and matrix a value between 0.0 and 1.0, inclusive, in what might be considered intuitively a consistent manner. For example, the term ``high'' could be assigned the vector

0.0 0.0 0.1 0.3 0.7 1.0 1.0

and ``low'' could be set equal to the reverse of ``high,'' or

1.0 1.0 0.7 0.3 0.1 0.0 0.0

The AND, OR, NOT operators of boolean logic exist in fuzzy logic, usually defined as the minimum, maximum, and complement; i.e.

[1] truth (not A) = 1.0 - truth (A)

[2] truth (A and B) = minimum (truth(A), truth(B))

[3] truth (A or B) = maximum (truth(A), truth(B))

Or, in another form...

(defmacro $and (&rest l) `(min ,@l)) (defmacro $or (&rest l) `(max ,@l)) (defun $not (x) (- 1 x))

When they are defined this way, the are called the Zadeh operators, because they were originally defined as such in Zadeh's original papers.

Here are some examples of the Zadeh operators in action:

[1] truth (not A) = 1.0 - truth (A)

[2] truth (A and B) = minimum (truth(A), truth(B))

[3] truth (A or B) = maximum (truth(A), truth(B))

Here are some more examples:

Here's yet another example

Some researchers in fuzzy logic have explored the use of other interpretations of the AND and OR operations, but the definition for the NOT operation seems to be safe.

Note that if you plug just the values zero and one into the definitions [1],[2],[3], you get the same truth tables as you would expect from conventional Boolean logic. This is known as the EXTENSION PRINCIPLE, which states that the classical results of Boolean logic are recovered from fuzzy logic operations when all fuzzy membership grades are restricted to the traditional set {0, 1}. This effectively establishes fuzzy subsets and logic as a true generalization of classical set theory and logic. In fact, by this reasoning all crisp (traditional) subsets ARE fuzzy subsets of this very special type; and there is no conflict between fuzzy and crisp methods.

Assume that we have some fuzzy sub membership functions for combination of TALL and OLD things defined as follows:

function tall(height) {

if (height < 5 ) return Zero;

if (height <=7 ) return (height-5)/2;

return 1;

}

function old(age) {

if (age < 18) return Zero;

if (age <= 60) return (age-18)/42;

return 1;

}

function a(age,height) { return FAND(tall(height),old(age)) }

function b(age,height) { return FOR(tall(height), old(age)) }

function c(height) { return FNOT(tall(height)) }

function abc(age,height) {

return FOR(a(age,height),b(age,height),c(height)) }

(The functions FNOR, FAND, FOR are the Zadeh operators.)

For compactness, we'll call our combination functions:

a = X is TALL and X is OLD

b = X is TALL or X is OLD

c = not (X is TALL)

abc= a or b or c

Here's the OLDness functions:

Here's the TALLness function:

Here's (c); i.e. the not TALLness function:

Here's (a): TALL and OLD

Here's (b): TALL or OLD

Here's (abc): a or b or c

Methodology, a fuzzy logic session looks like this:

Suppose we have an output fuzzy set AMOUNT which has three members: ZERO, MEDIUM, and HIGH. We assign to each of these fuzzy set members a corresponding output value, say 0 for Zero, 40 for MEDIUM, and 100 for HIGH. A Defuzzification procedure might then be:

return (ZERO*0 + MEDIUM*40 + HIGH*100)/(0+40+100)

How close is the enemy?

How large is the enemy's forces?

What is the size of the threat?

PROXIMITY

SIZE close medium far

-------- ----------------------

tiny medium low low

small high low low

moderate high medium low

large high high medium

We need some membership functions:

(defun dist (d what)

(case what

(range '(close medium far))

(close (fuzzy-triangle d -30 0 30))

(medium (fuzzy-trapezoid d 10 30 50 70))

(far (fuzzy-grade d 0.3 50 100))

(t (warn "~a not known to dist" what))))

(defun size (s what)

(case what

(range '(tiny small moderate large))

(tiny (fuzzy-triangle s -10 0 10))

(small (fuzzy-trapezoid s 2.5 10 15 20))

(moderate (fuzzy-trapezoid s 15 20 25 30))

(large (fuzzy-grade s 0.3 25 40))

(t (warn "~a not known to size " what))))

And we'll need to model the above table:

(defun fuzzy-deploy (d s what)

(macrolet (($if (x y) `($and (dist d ',x) (size s ',y))))

(case what

(range '(low medum high))

(low ($or ($if medium tiny)

($if far tiny)

($if medium small)

($if far small)))

(medium ($or ($if close tiny)

($if medium moderate)))

(high ($or ($if close small)

($if close moderate)

($if close large)

($if medium moderate)))

(t (warn "~a not known to fuzzy-deploy" what)))))

Finally, we need a defuzzication function:

(defun defuzzy-deploy (&key distance size)

"Return how many marines to deploy?"

(let ((low (fuzzy-deploy distance size 'low ))

(medium (fuzzy-deploy distance size 'medium))

(high (fuzzy-deploy distance size 'high )))

(round

(/ (+ (* 10 low) (* 30 medium) (* 50 high))

(+ low medium high)))))

So, how many marines to deploy?

(defuzzy-deploy :distance 25 :size 8)) ==> 19

(What I'm about to say seemed like a good

idea at the time- early 1990s. But most folks who took this seriously

ran into computational problems and had to switch from discrete-space

logic to some continuous variant. However, IMHO,

recent empirical stochastic

results suggest that this all might be worth a second look.

And, much to my surprise,

I see that

others think the same.

Also, technically speaking, what I'm about to show you is my own local

variant of abduction called graph-based abduction that comes

from my

1995 Ph.D. thesis.

For a more general treatment, that is more

standard, see

Bylander T., Allemang D., Tanner M.C., Josephson J.R.

The computational complexity of. abduction, Artificial Intelligence Journal

49(1-3), pp. 25-60, 1991.)

Republicans aren't pacificists by quakers are. Is Nixon (who was both a republican and a quaker) a pacificist?

This is a horrible problem. Observe that it can't be solved by sitting at the Nixon note and querying his immediate parents. You have to search into the network for contradictiory conclusions. That is, it can't be solved by some fast local propagation algorithm.

If you can't gather more information, you have two options:

Alternatively, if we are allowed to probe the world for more information, we can:

That is, unlike fuzzy logic (where everything is quickly inferred from hard-wired numbers set at design time), here we must extract and explore the topology of our doubts, then probe the key points.

Formally, this is abduction.

Welcome to abduction, the logic of "maybe". Abduction can be

contrasted with induction and deduction as follows:

Welcome to abduction, the logic of "maybe". Abduction can be

contrasted with induction and deduction as follows:

rules + antecedents ==> consequences

<antecesdent,consequence> <antecesdent,consequence> <antecesdent,consequence> <antecesdent,consequence> ==> rules

rules + consequences ==> antecedentsNote that abduction is not a certain inference since there may exist multiple rules that explain the cosnequence. For example:

In practice, all these methods run together:

(That's the official story. My own experience is that by the time you get an abductive device going, you have much of the machinery required for deduction and induction. But don't tell anyone,)

Formally, abduction looks like this:

Without rule2, abduction becomes deduction:

With rule2, abduction gets very slow:

That is, when the accountants run the race,

rules 1 & 2 have to be modified:

Assumptions define worlds of belief.

Assumptions come in two forms:

Note that the least informative assumptions are the non-base, non-controversial assumption (the yawn set).

(BTW, is your head spinning yet? Don't you wish we were still doing fuzzy logic?)

An example makes all this clear. Here's a theory:

In this example, our inputs are:

Our goals are to explain the outputs:

We have some background knowledge

The above theory supports the following consistent chains of reasons that start at the inputs and end at the outputs:

In the above, companyProfits=up and companyProfits=down are contraversial assumptions. They are also base since they depend on no upstream contraversial assumptions.

We say that one world of belief is defined by each maximal consistent subsets of the base contraversial assuumptions. By collecting together chains of reasons consistent with each such subset, we find two worlds:

So our example leads us to two possibilities:

Each world is internally consistent (by definition) so predictions can be made without checking for inconsistencies. So, ignoring the world generation cost (which can be scary), the subsequent prediction cost is very cheap.

Note the connection of abduction to dediction and induction.

When multiple worlds can be generated and a best operator selects the preferred world: i.e.

Different applications for abduction arise from different best operators: For example, classification is just a special case of prediction where some subset of the goals have special labels (the classes).

Another special case of prediction is validation where we score a theory by the maximum of the number of goals found in any world. This is particularly useful for assessing theories that may contain contradictions (e.g. early life cycle requirements).

Yet another special case of prediction is planning. Here, we have knowledge of how much it costs to use part of the theory and planning-as-abduction tries to to maximize coverage of the goals while minimizing the traversal cost to get to those goals. Note that, the directed graph in the generated worlds can be interpreted as an order of operations.

Monitoring can now be built as a a post-processor to planning. Once all the worlds are generated, we cache them (? to disk). As new information arrives, any world that contradicts that new data is deleted. The remaining worlds contain the remaining space of possibilities.

Minimal fault diagnosis means favoring worlds that explain most diseases (goals) with the fewest inputs; i.e.

Probing is a special kind of diagnoistic activity that seeks the fewest tests that rule out the most possibilties. In this framework, we would eschew probes to non-contraversial assumptions and favor probes to the remaining base assimptions.

The list goes on. Explanation means favoring worlds containing ideas that the user has seen before. This implies maintaining a persistent user profile storing everything the user has seen prior to this session with the system.

Tutoring is a combination of explanation and planning. If the best worlds we generate via explanation are somehow sub-optimum (measured via some domain-specific criteria) we use the planner to plot a path from the explainable worlds to the better worlds. This path can be intepreted as a lesson plan.

The list goes on. But you get the idea. We started with uncertainty and got to everything else.

Managing uncertainty is a core process in many tasks.

Abduction as a unifying principle for implementing uncertain reasoning.

One Ring to rule them all, One Ring to find them, One Ring to bring them all and in the darkness bind them

Well, we all know what comes next...

Abduction belongs to a class of problems called NP-hard. That is, there no known fast and complete algorithm for the general abductive problem. And we say that after decades of trying to find one.

So if everything is abduction then everything is hard. Hmmm... sounds about right.

But I gave up on abduction when all my abductive inference engines ran into computational walls. The following runtimes come from validation-as-abduction using an interepreted language on a 1993 computer. But the general shape of this graph has been seen in other abductive inference engines.

But I'm starting to think I should come back to abduction. Here's one experiment with an ISSAMP-style world generation method for validation-as-abduction. Repeatedly, this adbuctive inference engine built one consistent world, making random choices as it went. The algorithm terminated when new goals stopped appearing in the generated worlds. This algorithm ran in polynomical time (as apposed to the complete validation-as-abduction method shown on the left.

It is hardly surprising that an incomplete random search runs faster than a complete one. But the real significant finding was that in the region where both algorithms terminated, the random search found 98\% of the goals found by the complete search.

Why? Well, that's another story for another time but if you are interested, this (which is an ok paper) this (which is a really nice paper).

By now you probably have a pretty strong view on the relative merits of fuzzy logic or abduction (implementation complexity, generality, etc). So what are you going to use in your commercial AI work?

Well, that depends. If you are just playing games then what-the-hell, use fuzzy logic.

And if you are trying to diagnoise potentially life threatenning diseases using the lesat cost and fewest number of invasive procedures, you might consider the complexity of abduction well worth the implementation effort.

But you're engineers- you decide.