(From LtU Classic Archives.)

Does the controversial Sapir-Whorf Hypothesis or theory of "linguistic relativity" that has been proposed for human languages hold for programming languages and the programmers who use them?

"Linguistic relativity" is the

"The Sapir-Whorf Hypothesis is the most famous. Benjamin Whorf's hypothesis had two interpretations, the first is called determinism ["people's thoughts are determined by the categories made available by their language"], and the second is labeled relativism ["differences among languages cause differences in the thought of their speakers"..... "

Do the language features of the programming languages a programmer uses as a computer science student determine the ideas that a programmer is able to use and understand as a professional programmer?

Kenneth E. Iverson, the originator of the APL programming language, believed that the Sapir-Whorf hypothesis applied to computer languages (without actually mentioning the hypothesis by name). His Turing Award lecture, "Notation as a Tool of Thought", was devoted to this theme, arguing that more powerful notations aid thinking about computer algorithms. The essays of Paul Graham explore similar themes, such as a conceptual hierarchy of computer languages, with more expressive and succinct languages at the top.

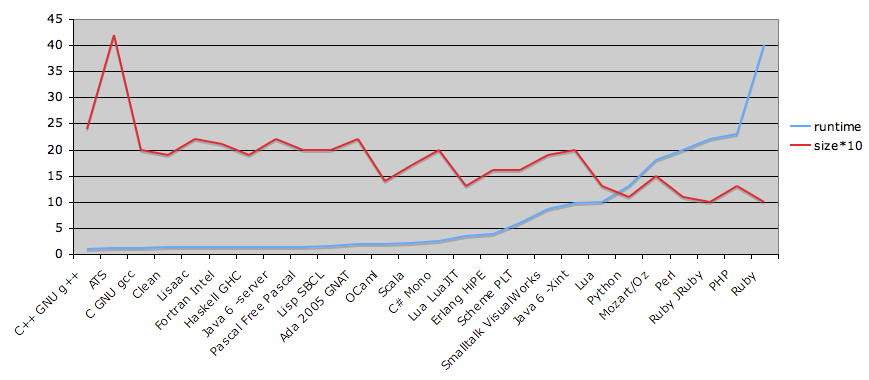

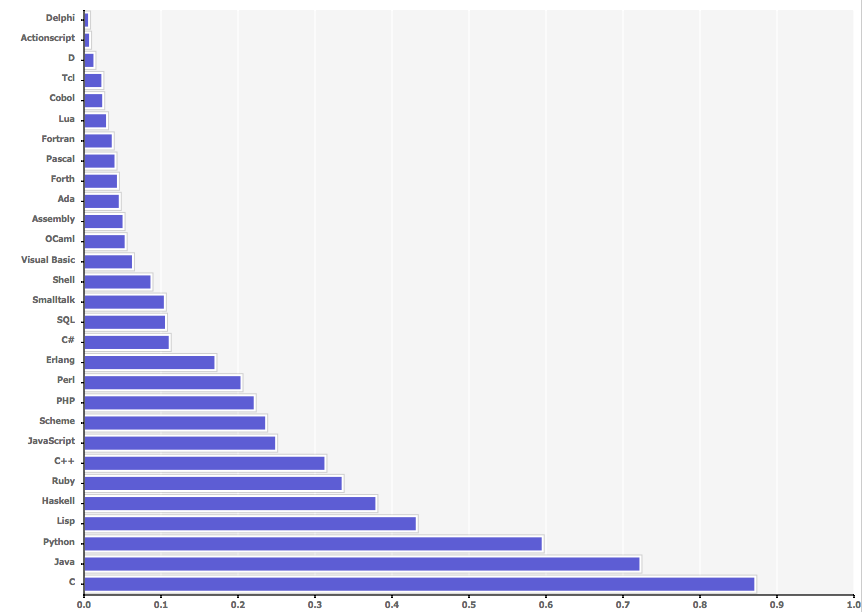

I used to believe in Sapir-Whorf and wrote a lot about domain-specific programming languages (where the language was tuned to the idioms of the local experts). Years later, I have yet to see evidence that this is a productive approach (other factors may be far more important). For example, if we ask the question "which language is fastest to run or easiest to use?", we get contradictory answers. It turns out that "fastest" and "easiest" are almost mutually exclusive (programs in faster languages take longer to write, and vice versa):

(Data from benchmarks at the Great Language Shootout).

Note the trade-off between the size of a function's source code and its runtime: halving the code size (from two KB to one) can increase the runtime by an order of magnitude or more.

Perhaps we should not ask what language is best. In terms of earning a pay cheque, a more important question is "what language will someone else pay me to use?".

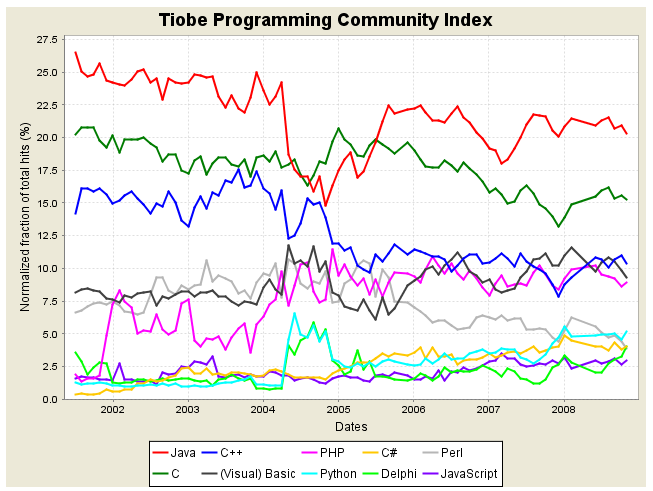

Depending on how you ask this question, you get different answers. The TIOBE web site has its own magic indexes for comparing languages.

(The May'04 Java dip is due to a Google purge of suspect sites from its web indexes. In response, TIOBE added other search engines to its sampling.)

Note that:

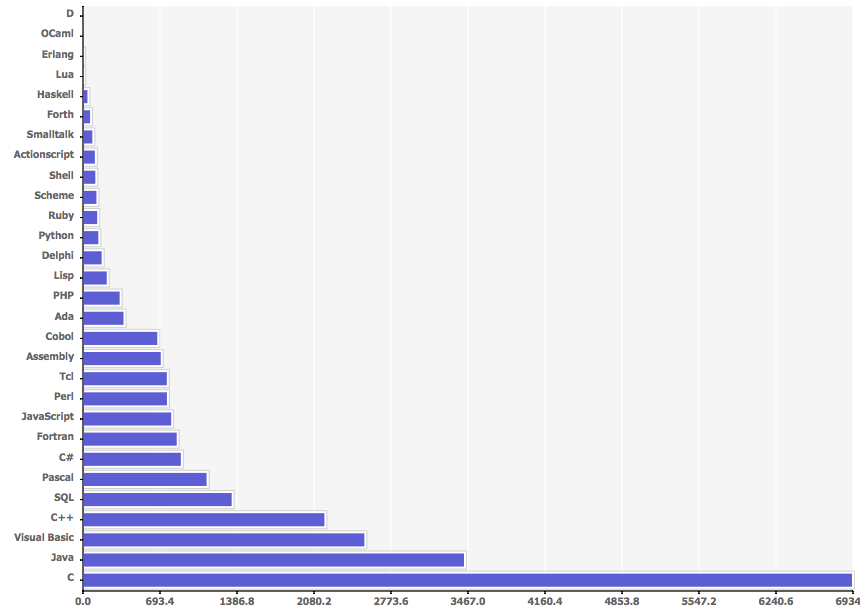

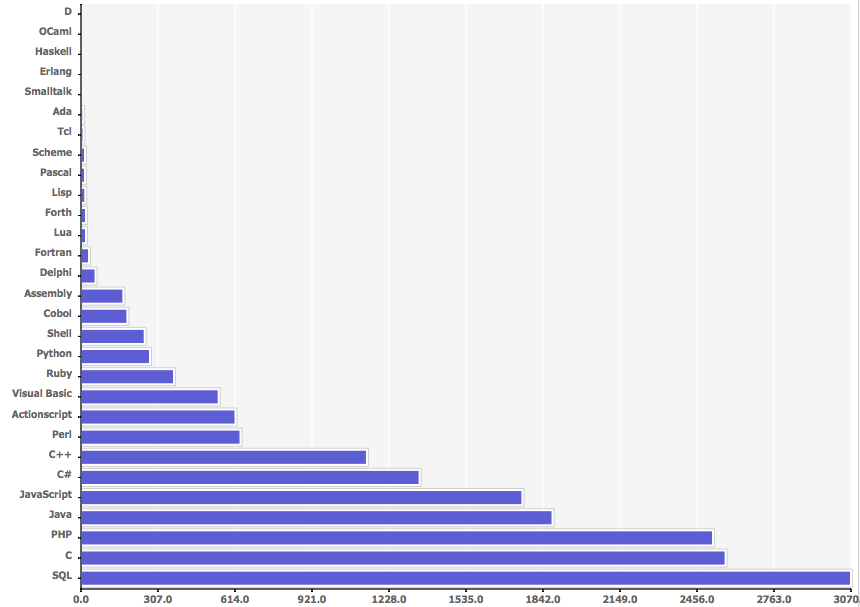

The method used to generate the above ratings has been seriously questioned. We need not explore those issues here except to say that comparatively assessing different languages is very difficult (perhaps impossible). Also, it is important to look at more than just the TIOBE ratings. For example, to find out what languages are most used commercially, we might ask Amazon.com what books it sells. Here is a breakdown of the number of titles describing different languages:

As before, JAVA, "C", "C++" and "Visual Basic" are the heavy hitters but, even taken together, these are used less than the others.

But that could be a bogus measure. Instead of asking "what can be read?", it might be better to ask "what languages are people making notes about?". This second measure could be more informative since some effort is required of the reader to make such a note. Delicious.com obliges:

Again, JAVA and "C" feature prominently, but now some weirdo languages are looking popular (e.g. LISP, HASKELL).

Yet another way to ask this question is "what languages are seen in job advertisements?". Here is a Nov 2, 2008 survey of languages mentioned in ads seeking programmers on Craig's List:

SQL is the heavy hitter? What the??? It did not even appear on the above graphs.

Anyway, one thing is clear: on the balance of probabilities, you will not be spending your professional life working on one language. Rather, you will be using any number of languages during your career. For example, here's a recent chat I had with someone working in a Silicon Valley start-up:

This is why this subject is important. Here, we try to teach general principles that apply to multiple languages. Hopefully this means that, in the future, you will be able to learn new languages, as required.

I should be able to write a test in any language. --J. Random Hacker

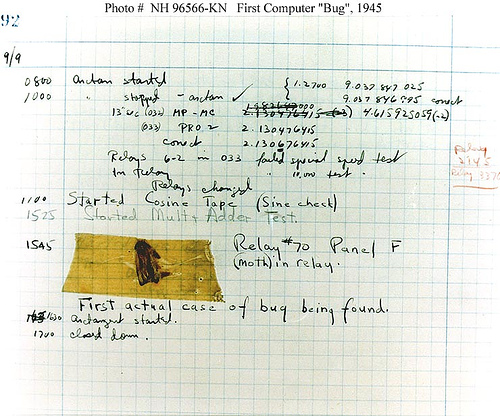

Every program has to be debugged:

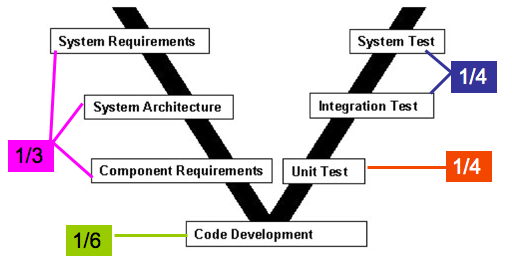

Wilkes is pointing out that the time required to debug a system is surprisingly large: over half the effort of building it. According to Brooks (The Mythical Man-Month, 1995), the time required to build software divides as follows:

Decades later, the same distribution persists. It looks like this:

What this diagram is saying is that if you want to drastically change the economics of software development, do something about testability. So in this subject, when we start with a new language, we start with the test engine.

Scientific applications

Business applications

Artificial intelligence

Systems programming

Web Software

Readability: the ease with which programs can be read and understood

Writability: the ease with which a language can be used to create programs

Cost: the ultimate total cost

Maintainability: each concept written in one place, once (e.g. normalized database data)

Reliability: conformance to specifications (i.e., performs to its specifications)

The rest of this subject is divided up into the above four paradigms.