| [ << ] | [ < ] | [ Up ] | [ > ] | [ >> ] | [Top] | [Contents] | [Index] | [ ? ] |

(This lecture comes from the excellent lectures of Anthony A. Aaby).

A computer constructed from actual physical devices is termed an actual computer or hardware computer. From the programming point of view, it is the instruction set of the hardware that defines a machine. An operating system is built on top of a machine to manage access to the machine and to provide additional services. The services provided by the operating system constitute another machine, a virtual machine.

A programming language provides a set of operations. Thus, for example, it is possible to speak of a Java computer or a Haskell computer. For the programmer, the programming language is the computer; the programming language defines a virtual computer. The virtual machine for Simple consists of a data area which contains the association between variables and values and the program which manipulates the data area.

Between the programmer’s view of the program and the virtual machine provided by the operating system is another virtual machine. It consists of the data structures and algorithms necessary to support the execution of the program. This virtual machine is the run time system of the language. Its complexity may range in size from virtually nothing, as in the case of FORTRAN, to an extremely sophisticated system supporting memory management and inter process communication as in the case of a concurrent programming languages.

User programs constitute another class of virtual machines.

A language translator is a program which translates programs from source language into an equivalent program in an object language. The source language is usually a high-level programming language and the object language is usually the machine language of an actual computer. From the pragmatic point of view, the translator defines the semantics of the programming language, it transforms operations specified by the syntax into operations of the computational model—in this case, to some virtual machine. Context-free grammars are used in the construction of language translators. Since the translation is based on the syntax of the source language, the translation is said to be syntax-directed.

A compiler is a translator whose source language is a high-level language and whose object language is close to the machine language of an actual computer. The typical compiler consists of an analysis phase and a synthesis phase.

In contrast with compilers an interpreter is a program which simulates the execution of programs written in a source language. Interpreters may be used either at the source program level or an interpreter may be used it interpret an object code for an idealized machine. This is the case when a compiler generates code for an idealized machine whose architecture more closely resembles the source code.

There are several other types of translators that are often used in conjunction with a compiler to facilitate the execution of programs. An assembler is a translator whose source language (an assembly language) represents a one-to-one transliteration of the object machine code. Some compilers generate assembly code which is then assembled into machine code by an assembler. A loader is a translator whose source and object languages are machine language. The source language programs contain tables of data specifying points in the program which must be modified if the program is to be executed. A link editor takes collections of executable programs and links them together for actual execution. A preprocessor is a translator whose source language is an extended form of some high-level language and whose object language is the standard form of the high-level language.

The typical compiler consists of several phases each of which passes its output to the next phase

(BTW, contrast the complexity of the above with the simplicity of LISP for defining new languages. When is the above complexity required? Avoidable?)

The scanner groups the input stream (of characters) into a stream of tokens (lexeme) and constructs a symbol table which is used later for contextual analysis. The lexemes include

The lexical phase (scanner) groups characters into lexical units or tokens. The input to the lexical phase is a character stream. The output is a stream of tokens. Regular expressions are used to define the tokens recognized by a scanner (or lexical analyzer). The scanner is implemented as a finite state machine.

Lex and Flex are tools for generating scanners is C. Flex is a faster version of Lex.

Example Lex file

INPUT:

abc123z.!&*2ghj6

ANALYZER

/*** Definition section ***/

%{

/* C code to be copied verbatim */

#include <stdio.h>

%}

/* This tells flex to read only one input file */

%option noyywrap

%%

/*** Rules section ***/

/* [0-9]+ matches a string of one or more digits */

[0-9]+ {

/* yytext is a string containing the matched text. */

printf("Saw an integer: %s\n", yytext);

}

. { /* Ignore all other characters. */ }

%%

/*** C Code section ***/

int main(void)

{

/* Call the lexer, then quit. */

yylex();

return 0;

}

OUTPUT:

Saw an integer: 123 Saw an integer: 2 Saw an integer: 6

The parser groups tokens into syntactical units. The output of the parser is a parse tree representation of the program. Context-free grammars are used to define the program structure recognized by a parser. The parser is implemented as a push-down automata.

Yacc and Bison are tools for generating bottom-up parsers in C. Bison is a faster version of Yacc. Jack is a tool for generating scanners and top-down parsers in Java.

Example parse tree for a simple English sentence

In addition to a data stream passing through the phases of the compiler, additional information acquired during a phase may be needed by a later phase. The symbol table is used to store the names encountered in the source program and relavant attributes. The information in the symbol table is used by the semantic checker when applying the context-senitive rules and by the code generator. The error handler is used to report and recover from errors encountered in the source.

Contextual checkers analyze the parse tree for context-sensitive information often called the static semantics. The output of the semantic analysis phase is an annotated parse tree. Attribute grammars are used to describe the static semantics of a program.

This phase is often combined with the paser. During the parse, information concerning variables and other objects is stored in a symbol table. The information is utilized to perform the context-sensitive checking.

The data structure passed between the analysis and synthesis phases is called the intermediate representation (IR)of the program. A well designed intermediate representation facilitates the independence of the analysis and syntheses (front- and back-end) phases. Intermedate representations may be

Restructuring the parse tree to reduce its size or to present an equivalent tree from which the code generator can produce more efficient code is called optimization.

It may be possible to restructure the parse tree to reduce its size or to present a parse to the code generator from which the code generator is able to produce more efficient code. Some optimizations that can be applied to the parse tree are illustrated using source code rather than the parse tree.

From:

while (count < limit) do

INPUT SALES;

VALUE := SALES * ( MARK_UP + TAX );

OUTPUT := VALUE;

COUNT := COUNT + 1;

end;

to:

TEMP := MARK_UP + TAX;

while (COUNT < LIMIT) do

INPUT SALES;

VALUE := SALES * TEMP;

OUTPUT := VALUE;

COUNT := COUNT + 1;

end;

Most program time is spent in the body of loops so loop optimization can result in significant performance improvement. Often the induction variable of a for loop is used only within the loop. In this case, the induction variable may be stored in a register rather than in memory. And when the induction variable of a for loop is referenced only as an array subscript, it may be initialized to the initial address of the array and incremented by only used for address calculation. In such cases, its initial value may be set

From:

For I := 1 to 10 do

A[I] := A[I] + E

to:

For I := address of first element in A

to address of last element in A

increment by size of an element of A do

A[I] := A[I] + E

From:

A := 6 * (B+C);

D := 3 + 7 * (B+C);

E := A * (B+C);

to:

TEMP := B + C;

A := 6 * TEMP;

D := 3 * 7 * TEMP;

E := A * TEMP;

a*b + a*c --> a*(b+c)

a - b --> a + ( - b )

The code generator transforms the intermediate representation into object code using rules which denote the semantics of the source language. These rules are define a translation semantics.

The code generator’s task is to translate the intermediate representation to the native code of the target machine. The native code may be an actual executable binary, assembly code or another high-level language. Producing low-level code requires familiarity with such machine level issues such as

The code generator may be integrated with the parser.

As the source program is processed, it is converted to an internal form. The internal representation in the example is that of an implicit parse tree. Other internal forms may be used which resemble assembly code. The internal form is translated by the code generator into object code. Typically, the object code is a program for a virtual machine. The virtual machine chosen for Simp consists of three segments. A data segment, a code segment and an expression stack.

The data segment contains the values associated with the variables. Each variable is assigned to a location which holds the associated value. Thus, part of the activity of code generation is to associate an address with each variable. The code segment consists of a sequence of operations. Program constants are incorporated in the code segment since their values do not change. The expression stack is a stack which is used to hold intermediate values in the evaluation of expressions. The presence of the expression stack indicates that the virtual machine for Simp is a “stack machine”.

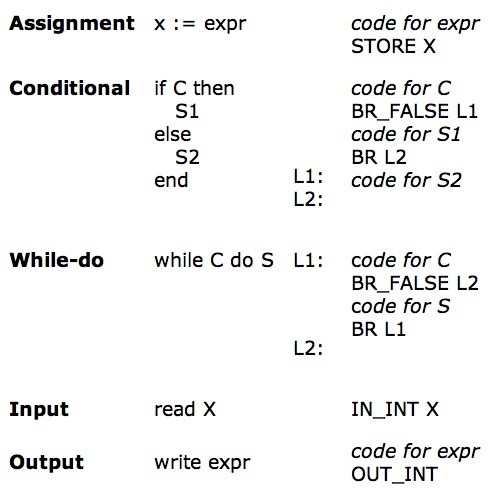

The assignment, if, while, read and write statements are translated as follows:

Peephole optimizers scan small segments of the target code for standard replacement patterns of inefficient instruction sequences. The peephole optimizer produces machine dependent code improvements. It works by recognising sets of instructions that don’t actually do anything, or that can be replaced by a leaner set of instructions.

Replace slow operations with faster ones. E.g.

a = b + c; d = a + e;

is straightforwardly implemented as

MOV b, R0 # Copy b to the register ADD c, R0 # Add c to the register, the register is now b+c MOV R0, a # Copy the register to a MOV a, R0 # Copy a to the register ADD e, R0 # Add e to the register, the register is now a+e [(b+c)+e] MOV R0, d # Copy the register to d

but can be optimised to

MOV b, R0 # Copy b to the register ADD c, R0 # Add c to the register, which is now b+c (a) MOV R0, a # Copy the register to a ADD e, R0 # Add e to the register, which is now b+c+e [(a)+e] MOV R0, d # Copy the register to d

In essence, why move "a" around? Just use it in place.

Furthermore, if the compiler knew that the variable a was not used again, the middle operation could be omitted.

Replace several operations with one equivalent.

Use algebraic laws to simplify or reorder instructions.

Use instructions designed for special operand cases.

Use address modes to simplify code.

Note: peephole optimization can be very machine dependent!

| [ << ] | [ < ] | [ Up ] | [ > ] | [ >> ] | [Top] | [Contents] | [Index] | [ ? ] |

This document was generated on April 19, 2011 using texi2html 5.0.